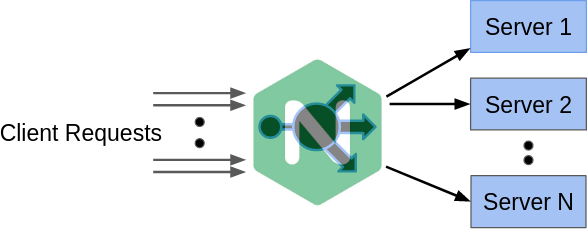

Figure 1: NGINX load balancer distributing the requests uniformly

A load balancer distributes incoming traffic across multiple web servers. We use a load balancer when a single web server cannot handle all the traffic.

NGINX provides load balancing along with its other features. In this experiment we will see how to use NGINX for load balancing and run tests to see how it performs.

Tools required to run the experiments: docker, docker-compose, httperf, curl (optional)

Web server without load balancer:

I have created a docker image with a simple HTTP server. This service replies with a fixed message and prints the number of requests it has received to the console.

To mimic server-side work, the server adds a delay of 0.01 s before replying.
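The source of the `gbhat1/hello_http_server` image is not shown here. As a rough illustration of the behaviour described above, here is a minimal Python sketch that uses only the standard library. The names `HelloHandler` and `make_server`, the reply body (the server name), and the use of a threaded server are assumptions made for illustration, not the image's actual implementation.

```python
import os
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Assumption: the fixed reply is the server name; the real image may differ.
SERVER_NAME = os.environ.get("SERVER_NAME", "server1")
DELAY_SECONDS = 0.01  # simulated server-side work, as described above

_count_lock = threading.Lock()
_request_count = 0


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: count the request, wait, send a fixed reply."""

    def do_GET(self):
        global _request_count
        with _count_lock:
            _request_count += 1
            count = _request_count
        print(f"{SERVER_NAME}: request #{count}")

        time.sleep(DELAY_SECONDS)

        body = (SERVER_NAME + "\n").encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Suppress the default access log so only the counter is printed.
        pass


def make_server(port=8080):
    """Build (but do not start) a threaded HTTP server on the given port."""
    return ThreadingHTTPServer(("0.0.0.0", port), HelloHandler)
```

Calling `make_server().serve_forever()` would start the sketch on port 8080, the same port the container exposes.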

To run this web server we use the command:

    sudo docker run --rm -p 8080:8080 -e "SERVER_NAME=server1" gbhat1/hello_http_server:latest

We can now reach http://localhost:8080 in a web browser or with a command-line tool like curl. With curl, the command is:

    curl -v http://localhost:8080

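Now let us run httperf to measure the performance of this web server. The command below attempts 1000 new connections per second, with one request per connection, until 10000 connections have been made, which takes about 10 seconds.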

    httperf --hog --server localhost --uri / --port 8080 --num-conn 10000 --num-call 1 --timeout 5 --rate 1000
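The relationship between the flags in this command can be worked out with a little arithmetic. The sketch below is only an illustration; `planned_load` is a hypothetical helper, not part of httperf.

```python
def planned_load(num_conns: int, rate: float, num_calls: int = 1) -> dict:
    """Derive the planned test shape from the connection count, rate and calls per connection."""
    return {
        "duration_s": num_conns / rate,          # how long the connection attempts last
        "interval_ms": 1000.0 / rate,            # gap between successive connection attempts
        "total_requests": num_conns * num_calls  # requests issued if every connection succeeds
    }


plan = planned_load(num_conns=10_000, rate=1_000, num_calls=1)
print(plan)
```

With the values used here, the plan is 10 seconds of load, one new connection every millisecond, and 10000 requests in total. A run that takes noticeably longer than the planned duration suggests the server is falling behind.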

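The output depends on the computational power of the system. One of the runs produced the output below.

We can see that the server handled ~666 connections/sec and an average of ~765 replies/sec. Only 8168 of the 10000 attempted connections received a reply; the remaining 1832 failed (1493 client timeouts and 339 unavailable file descriptors), and the test took 14.497 s instead of the planned 10 s.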

    httperf --hog --timeout=5 --client=0/1 --server=localhost --port=8081 --uri=/ --rate=1000 --send-buffer=4096 --recv-buffer=16384 --num-conns=10000 --num-calls=1
    httperf: warning: open file limit > FD_SETSIZE; limiting max. # of open files to FD_SETSIZE
    Maximum connect burst length: 15
    Total: connections 9661 requests 9661 replies 8168 test-duration 14.497 s
    Connection rate: 666.4 conn/s (1.5 ms/conn, <=1022 concurrent connections)
    Connection time [ms]: min 2.3 avg 245.7 max 4732.6 median 8.5 stddev 813.6
    Connection time [ms]: connect 0.0
    Connection length [replies/conn]: 1.000
    Request rate: 666.4 req/s (1.5 ms/req)
    Request size [B]: 62.0
    Reply rate [replies/s]: min 764.4 avg 765.2 max 766.1 stddev 1.2 (2 samples)
    Reply time [ms]: response 244.4 transfer 1.3
    Reply size [B]: header 116.0 content 8.0 footer 0.0 (total 124.0)
    Reply status: 1xx=0 2xx=8168 3xx=0 4xx=0 5xx=0
    CPU time [s]: user 0.39 system 12.90 (user 2.7% system 89.0% total 91.6%)
    Net I/O: 108.6 KB/s (0.9*10^6 bps)
    Errors: total 1832 client-timo 1493 socket-timo 0 connrefused 0 connreset 0
    Errors: fd-unavail 339 addrunavail 0 ftab-full 0 other 0
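To see how the headline numbers in this output relate to each other, the totals and error counts can be extracted and cross-checked. The following is a sketch only: `parse_httperf` is a hypothetical helper, and `SAMPLE` holds three lines copied from the output above.

```python
import re

# Three lines copied from the httperf output above.
SAMPLE = (
    "Total: connections 9661 requests 9661 replies 8168 test-duration 14.497 s\n"
    "Errors: total 1832 client-timo 1493 socket-timo 0 connrefused 0 connreset 0\n"
    "Errors: fd-unavail 339 addrunavail 0 ftab-full 0 other 0\n"
)


def parse_httperf(text: str) -> dict:
    """Extract the 'Total:' counters and every 'Errors:' counter."""
    total = re.search(
        r"Total: connections (\d+) requests (\d+) replies (\d+) "
        r"test-duration ([\d.]+) s",
        text,
    )
    if total is None:
        raise ValueError("no 'Total:' line found")
    stats = {
        "connections": int(total.group(1)),
        "requests": int(total.group(2)),
        "replies": int(total.group(3)),
        "duration_s": float(total.group(4)),
    }
    # Each 'Errors:' line is a sequence of "name count" pairs.
    for line in text.splitlines():
        if line.startswith("Errors:"):
            for name, value in re.findall(r"([a-z-]+) (\d+)", line[len("Errors:"):]):
                stats["err_" + name] = int(value)
    return stats


stats = parse_httperf(SAMPLE)
conn_rate = stats["connections"] / stats["duration_s"]
print(f"connection rate: {conn_rate:.1f} conn/s")
print(f"replies + client timeouts: {stats['replies'] + stats['err_client-timo']}")
print(f"connections + fd-unavail:  {stats['connections'] + stats['err_fd-unavail']}")
```

The connection rate is the number of connections divided by the test duration. In this run, replies plus client timeouts add up to the connections made, and connections plus `fd-unavail` errors add up to the 10000 attempts.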

Web servers with load balancer:

Now let us run the experiment with 3 web servers and a load balancer.

I have created a docker image with NGINX configured as a load balancer. The image uses the configuration below.

The web server names and ports are listed in the upstream app_servers block. More details about the configuration can be found at: https://www.nginx.com/resources/wiki/start/topics/examples/full/

    worker_processes 4;
    events { worker_connections 2048; }
    http {
        access_log off;
        # List of application servers
        upstream app_servers {
            server server1:8080;
            server server2:8080;
            server server3:8080;
        }
        # Configuration for the server
        server {
            # Running port
            listen 8080;
            # Proxying the connections
            location / {
                proxy_pass http://app_servers;
            }
        }
    }
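The `upstream` block above names no balancing method, so NGINX falls back to its default, round-robin. The idea can be sketched in a few lines of Python. This is a plain illustration of round-robin selection and not NGINX's actual implementation; `round_robin` and `UPSTREAM` are names chosen for the example.

```python
from collections import Counter
from itertools import cycle

# Same servers as in the upstream app_servers block.
UPSTREAM = ["server1:8080", "server2:8080", "server3:8080"]


def round_robin(servers):
    """Return an endless iterator that visits the servers in order, repeatedly."""
    return cycle(servers)


picker = round_robin(UPSTREAM)
assignments = [next(picker) for _ in range(9)]  # route 9 simulated requests
counts = Counter(assignments)

for server, count in counts.items():
    print(server, count)
```

Each server ends up with the same share of the simulated requests, which is the uniform distribution shown in Figure 1.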

We use docker-compose to start NGINX and the 3 web servers with the following command:

    docker-compose -f nginx-docker-compose.yml up

nginx-docker-compose.yml:

    version: '3'
    services:
      server1:
        image: gbhat1/hello_http_server:latest
        environment:
          "SERVER_NAME": "server1"
      server2:
        image: gbhat1/hello_http_server:latest
        environment:
          "SERVER_NAME": "server2"
      server3:
        image: gbhat1/hello_http_server:latest
        environment:
          "SERVER_NAME": "server3"
      nginx:
        image: gbhat1/nginx_lb_uniform:stable-alpine
        ports:
          - "8080:8080"
        links:
          - server1
          - server2
          - server3
        depends_on:
          - server1
          - server2
          - server3

If we access http://localhost:8080 with a browser or curl, we can see successive requests going to different web servers, because NGINX balances in round-robin order by default. We can send 10 requests using curl with the command below:

    seq 10 | xargs -Iz curl http://localhost:8080
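The same check can be scripted so that the replies are counted per server rather than read by eye. The sketch below assumes that the reply body identifies the server that handled the request, which this article does not confirm; `fetch` and `tally` are hypothetical helper names.

```python
from collections import Counter
import urllib.request


def fetch(url: str) -> str:
    """Return the reply body for one GET request, stripped of whitespace."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode().strip()


def tally(url: str, n: int = 10, fetch_fn=fetch) -> Counter:
    """Send n sequential requests and count how often each distinct reply appears."""
    return Counter(fetch_fn(url) for _ in range(n))
```

With the containers running, `print(tally("http://localhost:8080"))` would show how the 10 requests were spread. The `fetch_fn` parameter exists only so the counting logic can be tried without a live server.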

Let us run httperf to see the performance of this new setup:

    httperf --hog --server localhost --uri / --port 8080 --num-conn 10000 --num-call 1 --timeout 5 --rate 1000

The output may differ between systems. One run, shown below, indicates that the setup handled all 1000 connections/sec with an average of ~999.9 replies/sec and no errors.

    httperf --hog --timeout=5 --client=0/1 --server=localhost --port=8080 --uri=/ --rate=1000 --send-buffer=4096 --recv-buffer=16384 --num-conns=10000 --num-calls=1
    httperf: warning: open file limit > FD_SETSIZE; limiting max. # of open files to FD_SETSIZE
    Maximum connect burst length: 7
    Total: connections 10000 requests 10000 replies 10000 test-duration 10.000 s
    Connection rate: 1000.0 conn/s (1.0 ms/conn, <=14 concurrent connections)
    Connection time [ms]: min 0.9 avg 2.2 max 24.6 median 1.5 stddev 1.3
    Connection time [ms]: connect 0.0
    Connection length [replies/conn]: 1.000
    Request rate: 1000.0 req/s (1.0 ms/req)
    Request size [B]: 62.0
    Reply rate [replies/s]: min 999.8 avg 999.9 max 1000.1 stddev 0.2 (2 samples)
    Reply time [ms]: response 2.2 transfer 0.0
    Reply size [B]: header 155.0 content 8.0 footer 2.0 (total 165.0)
    Reply status: 1xx=0 2xx=10000 3xx=0 4xx=0 5xx=0
    CPU time [s]: user 2.87 system 4.23 (user 28.7% system 42.3% total 71.0%)
    Net I/O: 219.7 KB/s (1.8*10^6 bps)
    Errors: total 0 client-timo 0 socket-timo 0 connrefused 0 connreset 0
    Errors: fd-unavail 0 addrunavail 0 ftab-full 0 other 0
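Putting the two runs side by side makes the difference easier to judge. The figures in this sketch are copied from the two httperf outputs above; the dictionary keys are names chosen for the example.

```python
# Figures copied from the two httperf outputs in this article.
single = {"reply_rate": 765.2, "response_ms": 244.4, "errors": 1832}
balanced = {"reply_rate": 999.9, "response_ms": 2.2, "errors": 0}

throughput_gain = balanced["reply_rate"] / single["reply_rate"]
latency_ratio = single["response_ms"] / balanced["response_ms"]

print(f"reply rate:    x{throughput_gain:.2f}")
print(f"response time: {latency_ratio:.0f}x lower")
print(f"errors:        {single['errors']} -> {balanced['errors']}")
```

The reply rate in the balanced run is limited by the offered load of 1000 connections/sec, so the throughput gain computed here is probably a lower bound on what the three servers could sustain. A higher `--rate` would be needed to find the real ceiling.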

These experiments show how NGINX can be used as a load balancer to increase the throughput of the system.